Overview

How does one effectively build real-time analytics on data that arrives at more than 6,000 tweets per second? How does one take advantage of the wide array of data available on the web, which may contain over 4.74 billion pages? In neither case are you going to download a zip file or CSV for analysis.

In this lab, you will gain some initial understanding of how to deal with data from APIs and the unstructured data that makes up the web. The skills needed to fully take advantage of something as complex as the Twitter API or web scraping could fill a class on their own. Before discussing these, though, let’s review some of the fundamental technologies involved with APIs as well as some

Representational State Transfer (REST)

REST has a number of properties that have solidified its place as one of the most widely used ways to share data over the web.

* Client-Server. The server in the REST model accepts requests and is responsible for all data storage, but it is not concerned with the user interface. Clients can include other web applications, mobile devices, or other servers making integration calls. In many cases, RESTful APIs are built so that the same backend code can be reused across a number of frontend devices.

* Stateless. While an application often maintains the state of a user, RESTful API calls are designed to be self-contained. In other words, the server does not keep session state from your previous requests, so each request must carry everything needed to process it.

* Cacheable. A cache is temporary storage that can improve performance, because a response served from the cache does not require contacting the server again. Caching won’t be appropriate for all APIs, though, so there should be some mechanism to specify whether a response may be cached.

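* Uniform interface. Every resource is identified by a URI and manipulated through the same small set of standard operations (for HTTP, methods such as GET, POST, PUT, and DELETE), so clients can interact with any resource in a consistent way.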
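To make the stateless and cacheable properties above a little more concrete, here is a minimal sketch using only the Python standard library. The endpoint URL, the token, and the helper names `build_request` and `max_age_seconds` are assumptions made up for illustration; they are not part of any real API. The request is built but never sent, and the cache check is a deliberately simplified reading of the `Cache-Control` header.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Hypothetical endpoint, used only for illustration.
BASE_URL = "https://api.example.com/v1/tweets"


def build_request(query, token):
    """Build a self-contained GET request.

    Stateless: the query and the credentials travel with every request,
    so the server does not need to remember anything about earlier calls.
    """
    url = BASE_URL + "?" + urlencode({"q": query})
    headers = {
        "Authorization": "Bearer " + token,
        "Accept": "application/json",
    }
    return Request(url, method="GET", headers=headers)


def max_age_seconds(cache_control):
    """Return how long a cached copy may be reused without asking the server.

    Simplified: returns 0 when the header forbids reuse (no-store, no-cache)
    or when no valid max-age directive is present.
    """
    directives = [d.strip().lower() for d in cache_control.split(",")]
    if "no-store" in directives or "no-cache" in directives:
        return 0
    for directive in directives:
        if directive.startswith("max-age="):
            value = directive.split("=", 1)[1]
            return int(value) if value.isdigit() else 0
    return 0


request = build_request("#analytics", "demo-token")
print(request.get_method(), request.full_url)
print(max_age_seconds("public, max-age=300"))
```

The `Cache-Control` header is the kind of mechanism the Cacheable bullet refers to: the server states in each response whether, and for how long, a client may reuse it.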

Twitter Background - The Hashtag

One way of filtering through the ocean of data is with a hashtag. If you’ve sheltered yourself from social media, hashtags are simply terms with a # in front of them. For example, hashtags such as #rpi, #analytics, and #bigdata are ways of grouping tweets.

This is actually a rather interesting way of adding structure to text fields, providing an index of related tweets without imposing a formal structure. Hashtags can be important tools for brands to engage their followers or track sentiment in real time.
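As a rough illustration of how hashtags act as an index over free text, the sketch below pulls hashtags out of a few made-up tweets and groups the tweets by tag. The sample tweets, the regular expression, and the function name `index_by_hashtag` are assumptions for illustration only; the real hashtag rules Twitter applies are more involved than `\w+`.

```python
import re
from collections import defaultdict

# Simplified pattern: a '#' followed by letters, digits, or underscores.
HASHTAG = re.compile(r"#(\w+)")


def index_by_hashtag(tweets):
    """Map each lowercased hashtag to the positions of the tweets that use it."""
    index = defaultdict(list)
    for position, text in enumerate(tweets):
        # A set avoids listing a tweet twice when it repeats a hashtag.
        tags = {tag.lower() for tag in HASHTAG.findall(text)}
        for tag in sorted(tags):
            index[tag].append(position)
    return dict(index)


# Made-up sample data.
sample_tweets = [
    "Working on Lab 3 #RPI #Analytics",
    "New #bigdata course at #rpi",
    "Loving #analytics today",
]

for tag, positions in sorted(index_by_hashtag(sample_tweets).items()):
    print(tag, positions)
```

Lowercasing the tags means #RPI and #rpi land in the same group, which is usually what you want when grouping tweets.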

Consider television, an area where hashtags matter. Hashtags and dynamic interactions among users increase the value of watching a show live, which increases the ability to advertise. They also facilitate real-time feedback that can serve the same purpose as a focus group. In an interview covered on twnewscheck.com, Kristen Variola, director of social media for TLC, stated, “We always look and see what people are saying about our shows and it helps us inform our strategy.” Hashtags also give fans a way to engage with the television product outside of the viewing channel, even when new episodes are being released. This becomes an extension of the value the consumer obtains from watching.

OAuth: Why We Need It

Let’s say you are Twitter and you want to enable developers to build products on top of your ecosystem. One option would be to have users enter their Twitter password into the other applications, which would then use an API or even a web crawler to automatically enter it into Twitter. But what happens if there is a security breach, or even a malicious app creator? Your entire platform could be at risk.

How do you build security into a platform that needs to be open enough for people to build flexible and complex integrations on top of it, with read and write privileges, while keeping control in the hands of the user?

Enter OAuth.
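The core idea is that an application proves who it is, and on whose behalf it acts, with keys and tokens rather than with the user’s password. As a hedged sketch, assuming the OAuth 1.0a HMAC-SHA1 signing scheme described in RFC 5849, a request signature can be computed roughly as follows. The URL, parameter values, and secrets are made-up placeholders, and `sign_request` is a hypothetical helper name; in practice you would rely on a maintained OAuth library rather than hand-rolled signing.

```python
import base64
import hashlib
import hmac
from urllib.parse import quote


def percent_encode(value):
    """Percent-encode everything except unreserved characters."""
    return quote(str(value), safe="")


def sign_request(method, url, params, consumer_secret, token_secret):
    """Compute an HMAC-SHA1 signature over the method, URL, and parameters."""
    # Encode the parameters, then sort them by encoded name and value.
    encoded = sorted((percent_encode(k), percent_encode(v)) for k, v in params.items())
    param_string = "&".join(name + "=" + value for name, value in encoded)
    # The signature base string ties together method, URL, and parameters.
    base_string = "&".join(
        [method.upper(), percent_encode(url), percent_encode(param_string)]
    )
    # The signing key combines the app's secret with the user's token secret.
    key = percent_encode(consumer_secret) + "&" + percent_encode(token_secret)
    digest = hmac.new(key.encode("utf-8"), base_string.encode("utf-8"), hashlib.sha1)
    return base64.b64encode(digest.digest()).decode("ascii")


# Placeholder values for illustration only.
example_params = {
    "oauth_consumer_key": "demo-consumer-key",
    "oauth_token": "demo-access-token",
    "oauth_nonce": "abc123",
    "oauth_timestamp": "1400000000",
    "oauth_signature_method": "HMAC-SHA1",
    "oauth_version": "1.0",
    "q": "#analytics",
}
signature = sign_request(
    "GET",
    "https://api.example.com/search",
    example_params,
    "demo-consumer-secret",
    "demo-token-secret",
)
print(signature)
```

Notice that the user’s password appears nowhere. If the token is revoked, signatures made with its secret stop being accepted, which is how control stays in the hands of the user.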

Getting Started

The first thing you need to do is create a Twitter account via the Twitter website. This is a relatively painless process. Go ahead and put out a tweet: “@jasonkuruzovich I’m working on Lab 3. #MGMT6963 #RPI #Analytics http://goo.gl/PJx19X” (You can add anything else with the remaining 52 characters!)

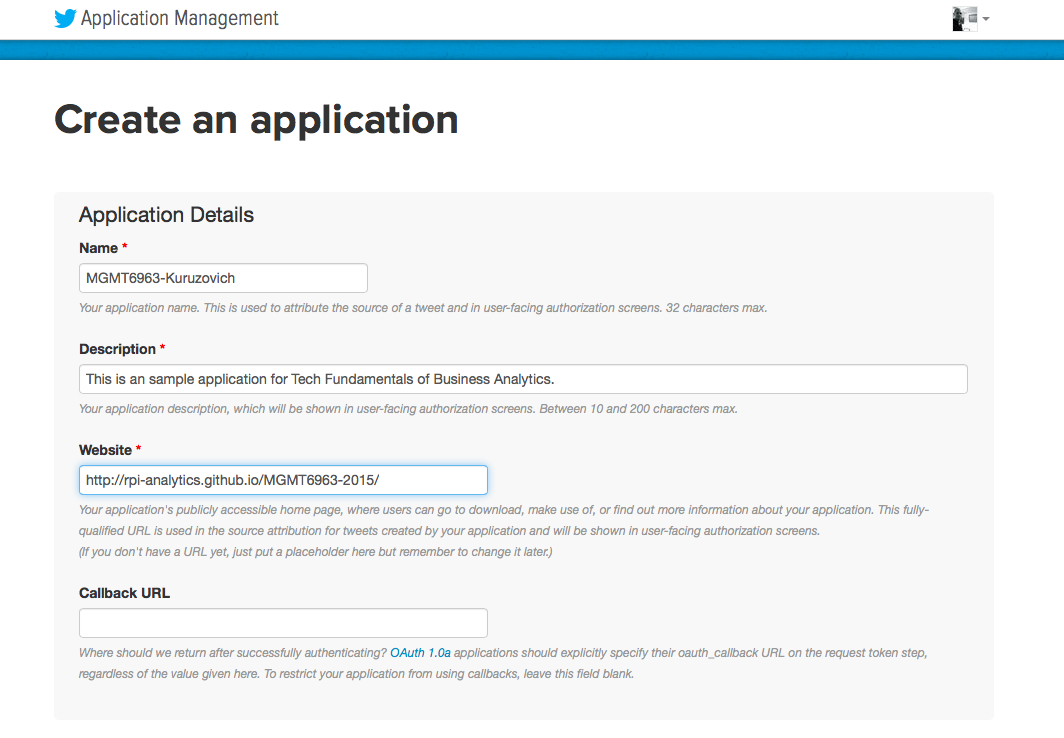

The next thing you will need to do is to create a Twitter App. Go to the Twitter Developer App page https://apps.twitter.com and click on the Create New App button. This will give you your OAuth keys.

Then fill in the form with something like this for your first application. Note: you will have to associate a phone number with your Twitter account. If you don’t have one, you can probably share an account with a neighbor; we just can’t share one as a class.
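Once the app exists, its OAuth keys should be kept out of the notebook itself so they are not shared by accident. One possible approach, sketched below, reads them from environment variables. The variable names and the `load_credentials` helper are hypothetical, and the sketch assumes the app page provides four values (a consumer key and secret plus an access token and secret).

```python
import os

# Hypothetical environment variable names; pick whatever suits your setup.
KEY_NAMES = (
    "TWITTER_CONSUMER_KEY",
    "TWITTER_CONSUMER_SECRET",
    "TWITTER_ACCESS_TOKEN",
    "TWITTER_ACCESS_TOKEN_SECRET",
)


def load_credentials(environ=None):
    """Return the four OAuth values, failing loudly if any are missing."""
    if environ is None:
        environ = os.environ
    missing = [name for name in KEY_NAMES if not environ.get(name)]
    if missing:
        raise RuntimeError("Missing credentials: " + ", ".join(missing))
    return {name: environ[name] for name in KEY_NAMES}


# Example with placeholder values passed in directly instead of os.environ.
demo_environ = {name: "placeholder-" + str(i) for i, name in enumerate(KEY_NAMES)}
credentials = load_credentials(demo_environ)
print(sorted(credentials))
```

Failing early with a list of the missing names tends to be easier to debug than an authentication error from the API later on.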

The rest of the work is going to be in an IPython notebook. There are a few ways to access it.

Download Twitter Notebook

View Twitter Notebook

Next, upload it to Jupyter running on your local virtual machine. I know a number of you have had problems with the VM. If it isn’t working, try https://wakari.io

Questions.

- Describe the structure of the content of the output from trends.place.

- What might be the value of understanding Twitter trends from specific areas of the world? Give one potential use case.

- What is meant by following the cursor in the API example that follows tweets regarding analytics?

- Pick a specific hashtag different from the analytics hashtag provided in the example. Provide a list of related hashtags, users, and words using the analysis specified.

- Adjust the analysis to also include the most frequent URLs. Provide a screenshot of the output showing the most frequent URLs, in addition to the Python code used.

Web Scraping

Download Webmining Notebook

View Webmining Notebook

- Pick a structured web page from which you would like to extract some information. Try not to pick anything that is too complex. Use what you understood from the reading and lecture to describe the process of extracting information from a web page using Beautiful Soup.
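As a starting point, here is a minimal sketch of the general pattern with Beautiful Soup: parse the HTML, locate the element that holds the data, then walk its children and pull out the text. The HTML below is a made-up stand-in for a downloaded page, and the table id, course codes, and the `extract_rows` helper are hypothetical. The sketch assumes the `bs4` package is installed.

```python
from bs4 import BeautifulSoup

# Made-up HTML standing in for a page you have already downloaded.
HTML = """
<html><body>
  <table id="courses">
    <tr><th>Code</th><th>Title</th></tr>
    <tr><td>DEMO1000</td><td>Example Course A</td></tr>
    <tr><td>DEMO2000</td><td>Example Course B</td></tr>
  </table>
</body></html>
"""


def extract_rows(html):
    """Return the text of each data row in the table with id 'courses'."""
    soup = BeautifulSoup(html, "html.parser")
    table = soup.find("table", id="courses")
    rows = []
    for tr in table.find_all("tr"):
        # Header rows use <th>, so they yield no <td> cells and are skipped.
        cells = [td.get_text(strip=True) for td in tr.find_all("td")]
        if cells:
            rows.append(cells)
    return rows


for row in extract_rows(HTML):
    print(row)
```

For a real page, the work is mostly in inspecting the HTML to find a tag, id, or class that reliably identifies the content you want.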

Header photo salve-a-terra–twitter_4251_1280x800 by Danilo Ramos